Generative AI and LLMs are all the rage right now! They’ve taken the world by storm and no one seems to know how to approach this issue responsibly. Like many security professionals, I understand the impulse to take a ‘Ron Swanson’ approach and discard all these AI models. However, we believe that there is an extremely powerful service that Generative AI can provide when used responsibly.

It’s no secret that AI is a hot-button topic that leaves many organizations and security teams struggling to balance security and productivity. Today, we’re going to explore the world of Generative AI and LLMs, and how we can protect ourselves against the risks while maximizing productivity.

What is a Large Language Model (LLM)?

Large Language Models, also known as LLMs, are programs trained using machine learning on enormous datasets (billions of articles, books, songs, forum posts, etc.). These models take in that vast amount of training data and then use it to formulate and “generate” (hence the term Generative AI) responses to prompts. The cost to train an LLM scales with the size and quality of the dataset, as well as the computational resources required to train the LLM on the data.

For example, OpenAI’s CEO reported that GPT-4 cost over 100 million dollars to develop.

Cloudflare has a great article that dives into greater detail, but in short: LLMs are programs that are “taught” how to generate text responses to prompts by being spoon-fed absolutely massive amounts of data by millions of dollars worth of hardware.

Why are LLMs so useful?

Great, so we’ve begun setting the foundation for the Ancestor Cores of the Leagues of Votann. Now all we need to do is make sure that the Emperor of Mankind learns to completely abandon his future sons and we’ll be set.

Warhammer 40k jokes aside, what makes LLMs so useful, and how do they differ from “other” kinds of AI?

LLMs like ChatGPT, BingAI, and Gemini possess one key trait that allowed them to take the world by storm: LLMs are ridiculously easy to interface with. Instead of manually interviewing hundreds of people, writing complex queries to sift through thousands of database tables, or using business intelligence systems to analyze massive datasets, you can simply… ask. Your question can be as simple or complex as you want. In return, instead of gathering massive amounts of information and manually interpreting thousands of data points to determine your answer, the LLM outputs something that just makes sense. This is the power of AI.

Let’s take a look at a few strengths in greater detail:

1. Powerful Information Retrieval:

- Generative AI and LLMs can sift through massive datasets, acting like super-powered search engines. They understand complex queries and can identify relevant information even if it’s phrased differently than how it’s stored.

- Imagine searching for “causes of the Roman Empire’s fall” and the AI pulls up not just historical events but also economic factors and cultural shifts mentioned in various sources.

2. Question Answering and Inference:

- Unlike traditional search engines that return web pages, articles, or documents, Generative AI and LLMs can answer your questions directly, even if the answer isn’t explicitly stated in the data.

- They can make inferences and connections between different pieces of information, providing a deeper understanding of a topic.

3. Natural Language Interaction:

- Generative AI and LLMs allow us to interact with information in a natural, conversational way. You can ask follow-up questions, clarify doubts, and get more specific information without needing complex search queries.

4. Tailored Information Delivery:

- Generative AI and LLMs can personalize information retrieval based on your background knowledge and interests. This helps you zero in on the important information by excluding irrelevant details.

These are just four reasons why Generative AI and LLMs are as powerful as they are. And you know what? Surprise, I used Google’s Gemini to help generate them! This is the power of LLMs.


What are some risks associated with LLMs, and how do I protect myself?

Unfortunately, Generative AI and LLMs aren’t omnipotent paragons of immutable truth and honesty. They have limitations and risks that you need to be aware of when interacting with them.

All LLMs are different and have their own unique strengths and weaknesses. The data they are trained on and the parameters used for configuration influence the characteristics of a given LLM. Some LLMs are specifically fine-tuned for content creation, while others are configured to assist with research, etc. Let’s take a look at a few near-universal risks that you may encounter when using any LLM:

1. Prompt Engineering:

LLMs function on an input > output basis. You input a prompt, it outputs a response. What people have quickly discovered is that you can be very specific or intentional about how you format your prompt in order to get a specific output, even if it goes against the LLM’s directives and parameters. We call this concept prompt engineering (when it’s done deliberately to subvert a model, it’s often called prompt injection). A Chevrolet dealership discovered the consequences of an ill-configured LLM when a potential customer tricked their AI into committing to sell a car to him for just $1. It’s well worth the read, I promise.

When engaging with an LLM, you want to be careful with how you word your prompts because one can engage in prompt engineering, even if they’re not intentionally attempting to manipulate the AI. A poorly worded or designed prompt can lead to inaccurate, misleading, or biased outputs. Be intentional about what you ask, and how you ask it, to make sure that you get the information you are searching for.

2. Hallucinations:

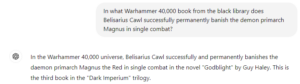

LLMs do not actually know what they’re talking about. They’re ultimately algorithms that have been trained to find patterns in the petabytes of data they’ve been fed, and then rely on those patterns to generate their responses. Unfortunately, those patterns can and do cause the LLM to output faulty information. Check out this example where I receive a hallucination from ChatGPT:

In truth, Belisarius Cawl never faces Magnus and permanently banishes him in single combat anywhere in the 40k collection of literary works! However, ChatGPT neither knows nor cares about the truth. It cares about satisfying its algorithmic need to produce a satisfying output, so it hallucinates an answer by drawing on familiar context clues. “Godblight” is indeed a book, and it does closely follow a primarch, but not Magnus. It follows Roboute Guilliman, for anyone interested.
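To make the “patterns, not knowledge” point concrete, here is a deliberately tiny sketch. It is nothing like how ChatGPT is actually built (real LLMs are neural networks, not word-pair tables), and the two-sentence corpus and the function names `train_bigrams` and `all_generations` are made up for illustration. The toy model only learns which word tends to follow which, yet that alone is enough for it to produce fluent sentences that are false:

```python
from collections import defaultdict

# Toy training data (an assumption for this sketch): every sentence is true.
CORPUS = [
    "paris is the capital of france",
    "rome is the capital of italy",
]

START, END = "<s>", "</s>"


def train_bigrams(sentences):
    """Record, for each word, the set of words seen directly after it."""
    follows = defaultdict(set)
    for sentence in sentences:
        tokens = [START] + sentence.split() + [END]
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current].add(nxt)
    return follows


def all_generations(follows, max_words=10):
    """Enumerate every sentence the model can produce by chaining learned word pairs."""
    results = set()

    def walk(word, words_so_far):
        for nxt in follows[word]:
            if nxt == END:
                results.add(" ".join(words_so_far))
            elif len(words_so_far) < max_words:
                walk(nxt, words_so_far + [nxt])

    walk(START, [])
    return results


model = train_bigrams(CORPUS)
generated = all_generations(model)

# Sentences the model can "say" that never appeared in its training data.
invented = sorted(generated - set(CORPUS))
print(invented)
# → ['paris is the capital of italy', 'rome is the capital of france']
```

Every word pair in those invented sentences was learned from true statements; the model simply has no notion of whether the combination is true. That is the same failure mode, at toy scale, as the hallucination in the example.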

How can you protect yourself against AI hallucinations? The answer is simple: Don’t trust AI to generate accurate information. Do your own research to validate whatever the LLM you’re using generates. Use tools like Gemini, ChatGPT, and BingAI to supplement and assist your workflow, not replace it.

3. Data Ingesting:

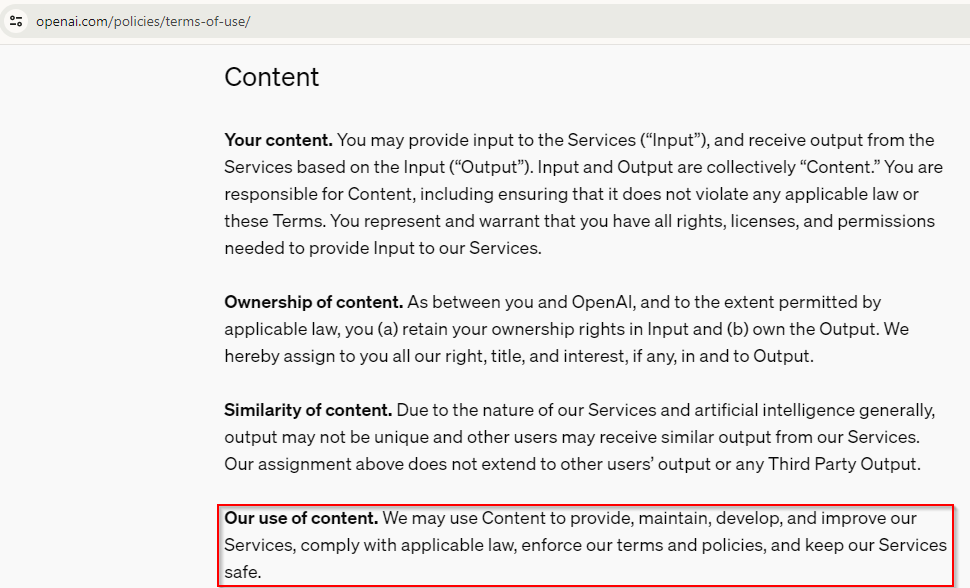

We explored the concept of LLMs being programmed with vast datasets earlier in this article. To constantly improve their LLMs, organizations rely on user input as one of their tools. When you engage with an LLM, the system is studying your inputs and how you engage with the LLM. Let’s take a look at Open AI’s terms of use:

While you retain ownership of the rights to the immediate input and output, OpenAI retains the right to use your Content (including everything you input) to develop and improve their Services. This is not an issue for simple queries and explorations using ChatGPT. However, it also means that anything sensitive or confidential uploaded into ChatGPT can be assimilated into the LLM’s hive mind. Here is an example: if a member of the HR department in your business uploads a confidential Excel spreadsheet holding internal compensation information, that data now lives on OpenAI’s infrastructure, where it may be replicated across their datacenters. Your business has just lost control of that data, and OpenAI is free to use it to further train ChatGPT. These TOS clauses hold massive risks for businesses of all sizes that aren’t careful about what their workers feed into public AI models.

There are several ways you can protect yourself against accidentally providing a public LLM with confidential information:

- Don’t feed it confidential information! Just… don’t. Engage with the AI, but avoid directly uploading or feeding it internal company data. Instead, sanitize anything you want to share by removing the sensitive information itself, along with anything that could identify it.

- If possible, use approved, business-specific AI models. Many LLMs offer paid or business variants that include protections for any data uploaded into the model. For example, Microsoft has released a variant of Copilot that features commercial data protection. This variant protects the company data you upload and ensures it’s not used to train the model. If your org uses Microsoft 365, try to use this business variant instead of the publicly available variant of Copilot.

- Don’t engage with AI at all if your use case doesn’t require it. I know. AI is all the rage right now. We can’t help but want to engage with AI, especially after discovering some ways that it can significantly speed up certain processes. However, it’s worth noting that just because a tool is available to use doesn’t mean it’s the most effective or even correct tool to use.

- Opt out of data collection when possible. If you absolutely must use a public LLM, try to opt out of any data collection settings that you can. This helps keep your data from being ingested by the AI and used to train the model further. Check out this article from Wired to learn more about which major AI services let you disable data collection, and how.

As a lightning-quick reference, Google’s Gemini and OpenAI’s ChatGPT and DALL-E services provide built-in options to opt out. Unfortunately, Microsoft’s public Copilot AI does not have such options as of the writing of this article.
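If you want a feel for what “sanitizing” a prompt could look like in practice, here is a minimal sketch. Everything in it is an assumption made for illustration: the `sanitize_prompt` helper, the three regular expressions, and the placeholder labels are all hypothetical. The patterns are nowhere near exhaustive, so treat this as a starting point for discussion rather than a data loss prevention tool.

```python
import re

# Illustrative patterns only (assumptions for this sketch). A real deployment
# would need a vetted, much broader set: names, addresses, account numbers, etc.
REDACTION_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),   # email addresses
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),            # US SSN-style numbers
    (re.compile(r"\$\d[\d,]*(?:\.\d{2})?"), "[AMOUNT]"),        # dollar amounts
]


def sanitize_prompt(text: str) -> str:
    """Replace anything matching a known sensitive pattern with a placeholder."""
    for pattern, placeholder in REDACTION_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


raw = "Summarize: jane.doe@example.com (SSN 123-45-6789) earns $85,000.00 per year."
print(sanitize_prompt(raw))
# → Summarize: [EMAIL] (SSN [SSN]) earns [AMOUNT] per year.
```

Pattern matching like this only catches what you thought to look for, so it works best as a safety net behind the first rule: don’t paste confidential data in to begin with.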

What can businesses do to responsibly use LLMs?

The most impactful step any business can take towards engaging with AI more securely is to implement two kinds of controls, which we’ll call people controls and technical controls.

- People controls. What exactly are people controls? Simply put, people controls are systems that companies can put in place to educate and guide their employees towards using AI appropriately. For example, we recommend that businesses of all sizes implement formal Generative AI policies and procedures. These policies should establish guidelines and acceptable use cases for the utilization of AI.

- Technical controls. Technical controls approach the issue from a technological standpoint. Technical controls can include things like outright blocking public LLM models from being accessible on company devices. Another example is to automatically redirect users towards approved business-specific AI models.
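As a rough illustration of the technical controls described above, the sketch below shows the kind of decision a web proxy or filtering layer might make: block known public LLM hosts and point users at an approved alternative. The host names, the `APPROVED_AI_URL` value, and the `route_request` function are all hypothetical placeholders. In a real environment this logic would normally live in your secure web gateway, DNS filter, or firewall policy rather than in a custom script.

```python
from urllib.parse import urlparse

# Hypothetical policy data (assumptions for this sketch, not real services).
BLOCKED_PUBLIC_LLM_HOSTS = {"public-llm.example", "other-chatbot.example"}
APPROVED_AI_URL = "https://ai.internal.example/"


def route_request(url: str) -> tuple[str, str]:
    """Return ("redirect", approved_url) for blocked LLM hosts, else ("allow", url)."""
    host = (urlparse(url).hostname or "").lower()
    for blocked in BLOCKED_PUBLIC_LLM_HOSTS:
        # Match the blocked domain itself and any of its subdomains.
        if host == blocked or host.endswith("." + blocked):
            return ("redirect", APPROVED_AI_URL)
    return ("allow", url)


print(route_request("https://chat.public-llm.example/c/123"))
# → ('redirect', 'https://ai.internal.example/')
print(route_request("https://intranet.example/wiki"))
# → ('allow', 'https://intranet.example/wiki')
```

Redirecting rather than silently blocking nudges people towards the approved tool, which pairs nicely with the people controls above.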

Each business is unique in its composition and requirements. Some businesses will not have any practical use for any kind of AI tool. Other businesses that adopt AI experience a surge in productivity, reaching an entirely new level. Here at Attainable Security, we believe in empowering businesses to take advantage of Generative AI securely and responsibly. Part of this includes helping businesses that wish to leverage AI develop and implement appropriate people and technical controls. If you’re interested in how we can help your business succeed in the age of AI, reach out to us to learn more!

Hopefully, this article helped clarify some of the risks that large language models pose, as well as how you and your business can take up a position that’s ready to take responsible advantage of this amazing new AI-powered world.